Table of Contents

By activating the Location History in your Google account, you let Google track where you go with every mobile device. You can view and manage your Location History information through Google Maps Timeline. The data is provided in JSON format. An example of such a file is as follows.

{

"locations" : [ {

"timestampMs" : "1525373187756",

"latitudeE7" : 508402936,

"longitudeE7" : 43413790,

"accuracy" : 26,

"activity" : [ {

"timestampMs" : "1525373185830",

"activity" : [ {

"type" : "STILL",

"confidence" : 44

}, {

"type" : "IN_VEHICLE",

"confidence" : 16

}, {

"type" : "IN_ROAD_VEHICLE",

"confidence" : 16

}, {

"type" : "UNKNOWN",

"confidence" : 12

}, {

"type" : "IN_RAIL_VEHICLE",

"confidence" : 12

...

If we want to load location information into MobilityDB we only need the fields longitudeE7, latitudeE7, and timestampMs. To convert the original JSON file into a CSV file containing only these fields we can use jq, a command-line JSON processor. The following command

cat location_history.json | jq -r ".locations[] | {latitudeE7, longitudeE7, timestampMs}

| [.latitudeE7, .longitudeE7, .timestampMs] | @csv" > location_history.csv

produces a CSV file of the following format

508402936,43413790,"1525373187756" 508402171,43413455,"1525373176729" 508399229,43413304,"1525373143463" 508377525,43411499,"1525373113741" 508374906,43412597,"1525373082542" 508370337,43418136,"1525373052593" ...

The above command works well for files of moderate size since by default jq loads the whole input text in memory. For very large files you may consider the --stream option of jq, which parses input texts in a streaming fashion.

Now we can import the generated CSV file into PostgreSQL as follows.

DROP TABLE IF EXISTS location_history; CREATE TABLE location_history ( latitudeE7 float, longitudeE7 float, timestampMs bigint, date date ); COPY location_history(latitudeE7, longitudeE7, timestampMs) FROM '/home/location_history/location_history.csv' DELIMITER ',' CSV; UPDATE location_history SET date = date(to_timestamp(timestampMs / 1000.0)::timestamptz);

Notice that we added an attribute date to the table so we can split the full location history, which can comprise data for several years, by date. Since the timestamps are encoded in milliseconds since 1/1/1970, we divide them by 1,000 and apply the functions to_timestamp and date to obtain corresponding date.

We can now transform this data into MobilityDB trips as follows.

DROP TABLE IF EXISTS locations_mdb; CREATE TABLE locations_mdb ( date date NOT NULL, trip tgeompoint, trajectory geometry, PRIMARY KEY (date) ); INSERT INTO locations_mdb(date, trip) SELECT date, tgeompoint_seq(array_agg(tgeompoint_inst( ST_SetSRID(ST_Point(longitudeE7/1e7, latitudeE7/1e7),4326), to_timestamp(timestampMs / 1000.0)::timestamptz) ORDER BY timestampMs)) FROM location_history GROUP BY date; UPDATE locations_mdb SET trajectory = trajectory(trip);

We convert the longitude and latitude values into standard coordinates values by dividing them by 107. These can be converted into PostGIS points in the WGS84 coordinate system with the functions ST_Point and ST_SetSRID. Also, we convert the timestamp values in miliseconds to timestamptz values. We can now apply the function

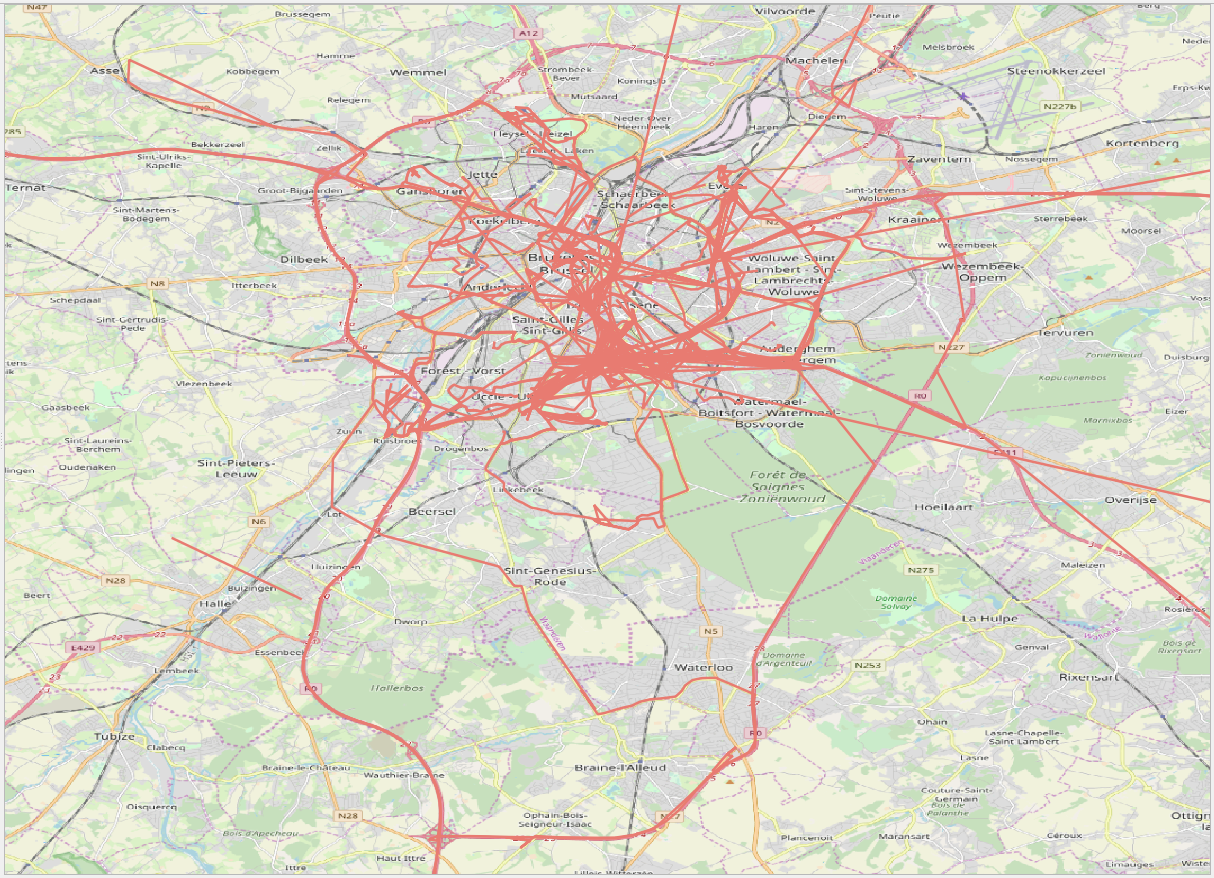

tgeompointinst to create a tgeompoint of instant duration from the point and the timestamp, collect all temporal points of a day into an array with the function array_agg, and finally, create a temporal point containing all the locations of a day using function tgeompointseq. We added to the table a trajectory attribute to visualize the location history in QGIS is given in Figure 5.1, “Visualization of the Google location history loaded into MobilityDB.”.